Finding OpenAI ChatGPT and Beyond

Exploring the land of GPTs and prompt engineering with the OpenAI API

ChatGPT is a large language model trained by OpenAI to interact with users conversationally. Let's take a look at one example.

To understand how ChatGPT was trained, it helps to first understand how OpenAI trained InstructGPT, a sibling model trained to follow an instruction in a prompt and provide a detailed response. Let's go through the paper to learn more.

Training InstructGPT

The paper, "Training language models to follow instructions with human feedback", was published in March 2022, about nine months before OpenAI released ChatGPT.

The core idea of the paper is to align language models with user intent on a wide range of tasks by fine-tuning them with human feedback.

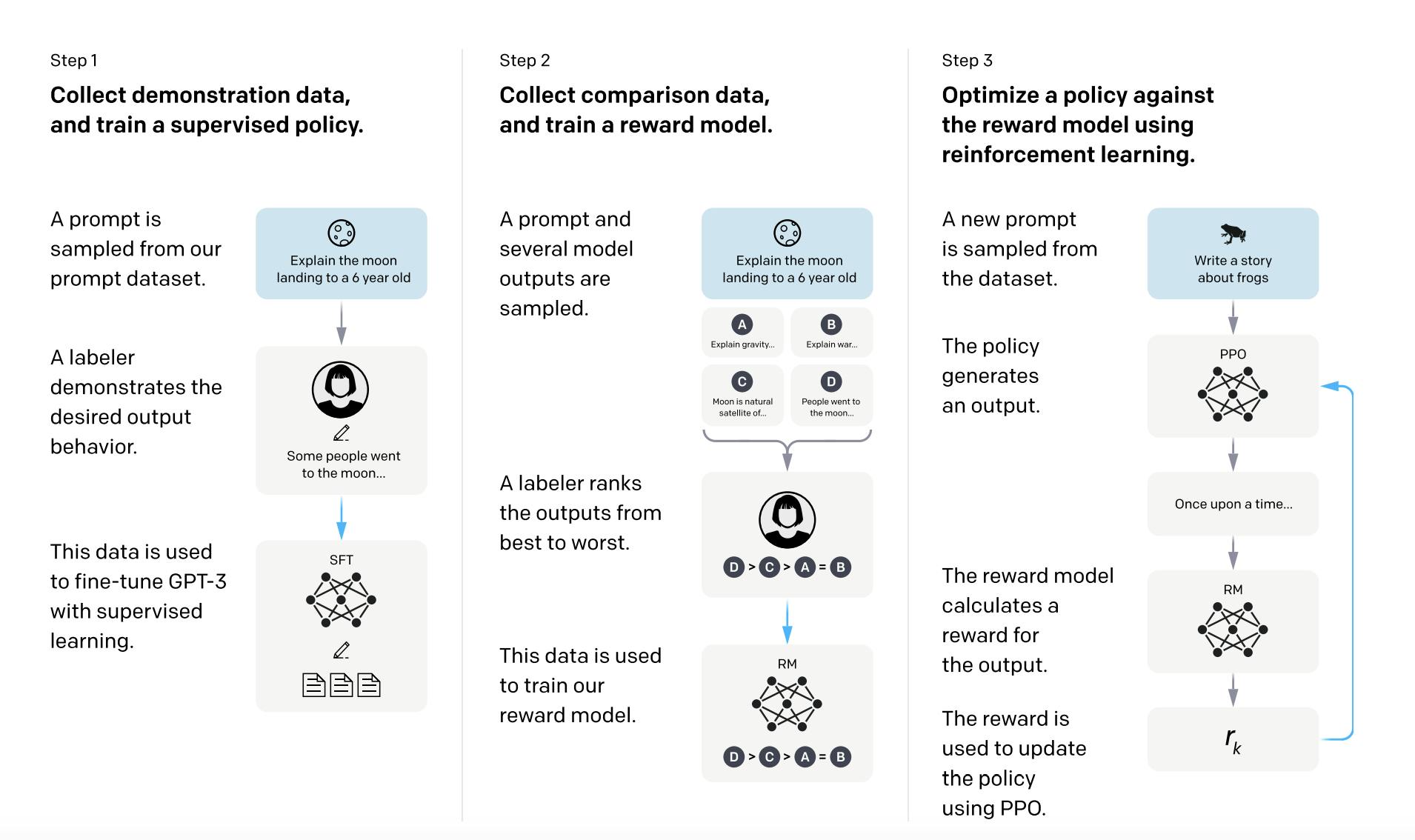

This was achieved in three steps. First, labellers wrote prompts and demonstrations of the desired model behaviour, and this data was augmented with prompts that users had submitted to earlier models through the OpenAI API Playground. The resulting dataset was used to fine-tune GPT-3 with supervised learning, giving a baseline.

Next, a dataset of human-labelled comparisons between model outputs on a larger set of API prompts was collected. This dataset was used to train a reward model (RM) to predict which output the labellers would prefer.
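In the paper, the RM is trained on these comparisons with a pairwise ranking loss: for a prompt x with a preferred completion y_w and a less-preferred completion y_l, the loss is -log(sigmoid(r(x, y_w) - r(x, y_l))), averaged over the compared pairs. Below is a minimal sketch of that loss in plain Python, assuming the RM scores have already been computed; the function names and scores are illustrative, not from the paper.

```python
import math

def pairwise_reward_loss(r_preferred: float, r_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)) for one labelled comparison."""
    diff = r_preferred - r_rejected
    # Numerically stable form of -log(sigmoid(diff)) = log(1 + exp(-diff))
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def mean_reward_loss(pairs):
    """Average the loss over (preferred, rejected) score pairs in a batch."""
    return sum(pairwise_reward_loss(w, l) for w, l in pairs) / len(pairs)

# Hypothetical RM scores: (score of preferred output, score of rejected output)
pairs = [(2.0, 0.5), (1.0, 1.0), (-0.5, 0.3)]
print(round(mean_reward_loss(pairs), 4))
```

The loss is log 2 (about 0.693) when the RM scores both outputs equally and shrinks toward 0 as the preferred output's score pulls ahead, so minimizing it pushes the RM to rank outputs the way the labellers did.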

Finally, the RM was used as a reward function, and the supervised baseline was further fine-tuned to maximize this reward with reinforcement learning, using the Proximal Policy Optimization (PPO) algorithm. The overall procedure is called reinforcement learning from human feedback (RLHF).
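For reference, the paper writes the RL objective as follows. Here r_θ is the reward model, π^SFT is the supervised baseline, π_φ^RL is the policy being trained, β weights a per-token KL penalty that keeps the policy close to the baseline, and γ weights an extra term on pretraining data (the paper calls γ > 0 "PPO-ptx" and γ = 0 plain "PPO"):

$$
\operatorname{objective}(\phi) = \mathbb{E}_{(x, y) \sim D_{\pi_\phi^{\mathrm{RL}}}}\left[ r_\theta(x, y) - \beta \log \frac{\pi_\phi^{\mathrm{RL}}(y \mid x)}{\pi^{\mathrm{SFT}}(y \mid x)} \right] + \gamma \, \mathbb{E}_{x \sim D_{\mathrm{pretrain}}}\left[ \log \pi_\phi^{\mathrm{RL}}(x) \right]
$$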

The workflow below summarizes the process:

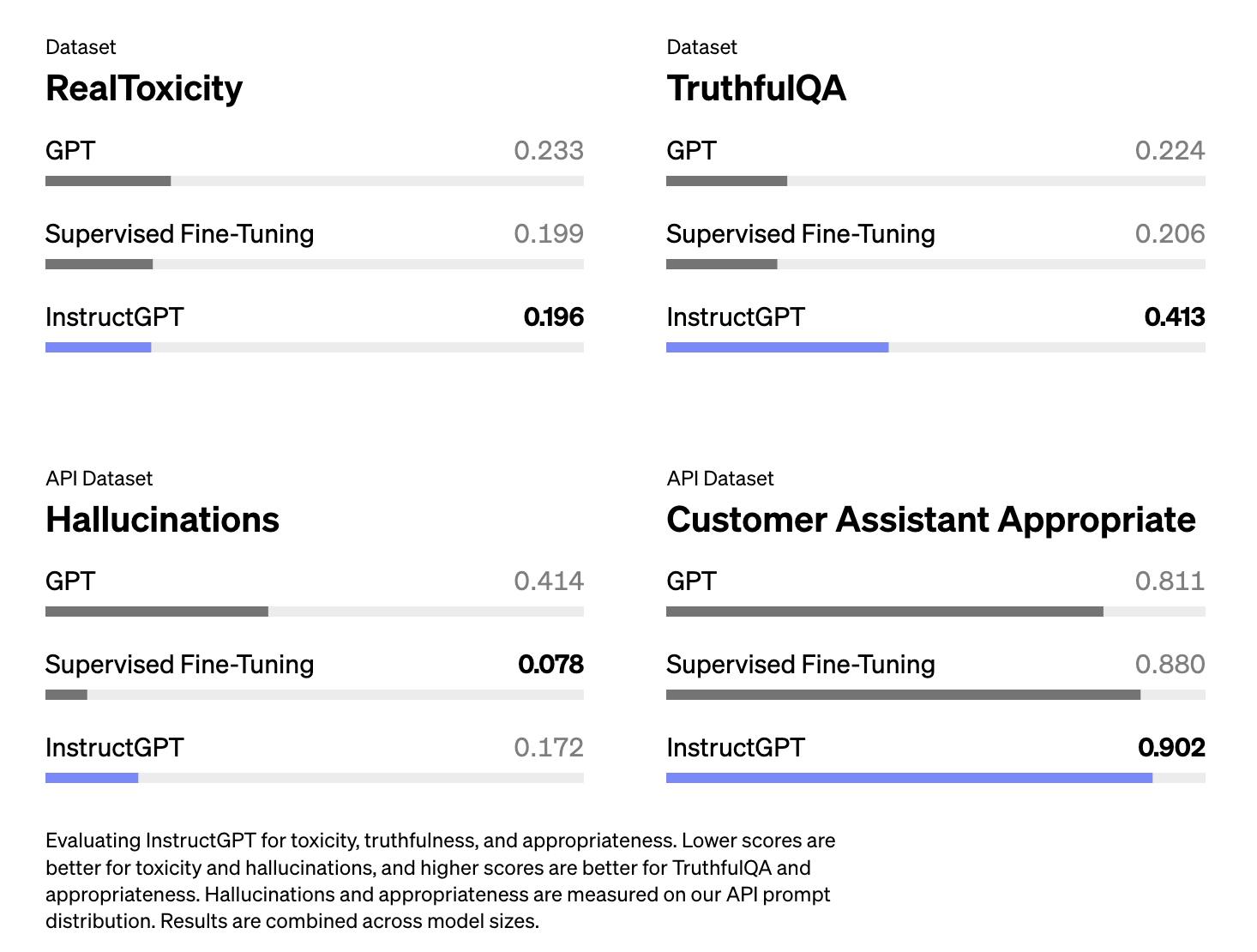

The main results were:

- Labellers preferred outputs from the 1.3B-parameter InstructGPT model over those from the 175B-parameter GPT-3, even though it has 100x fewer parameters; its outputs were better aligned with user intent.
- Improvements in truthfulness.
- Reductions in toxic output generation.

The statistics below show how well InstructGPT does:

Coming back to ChatGPT

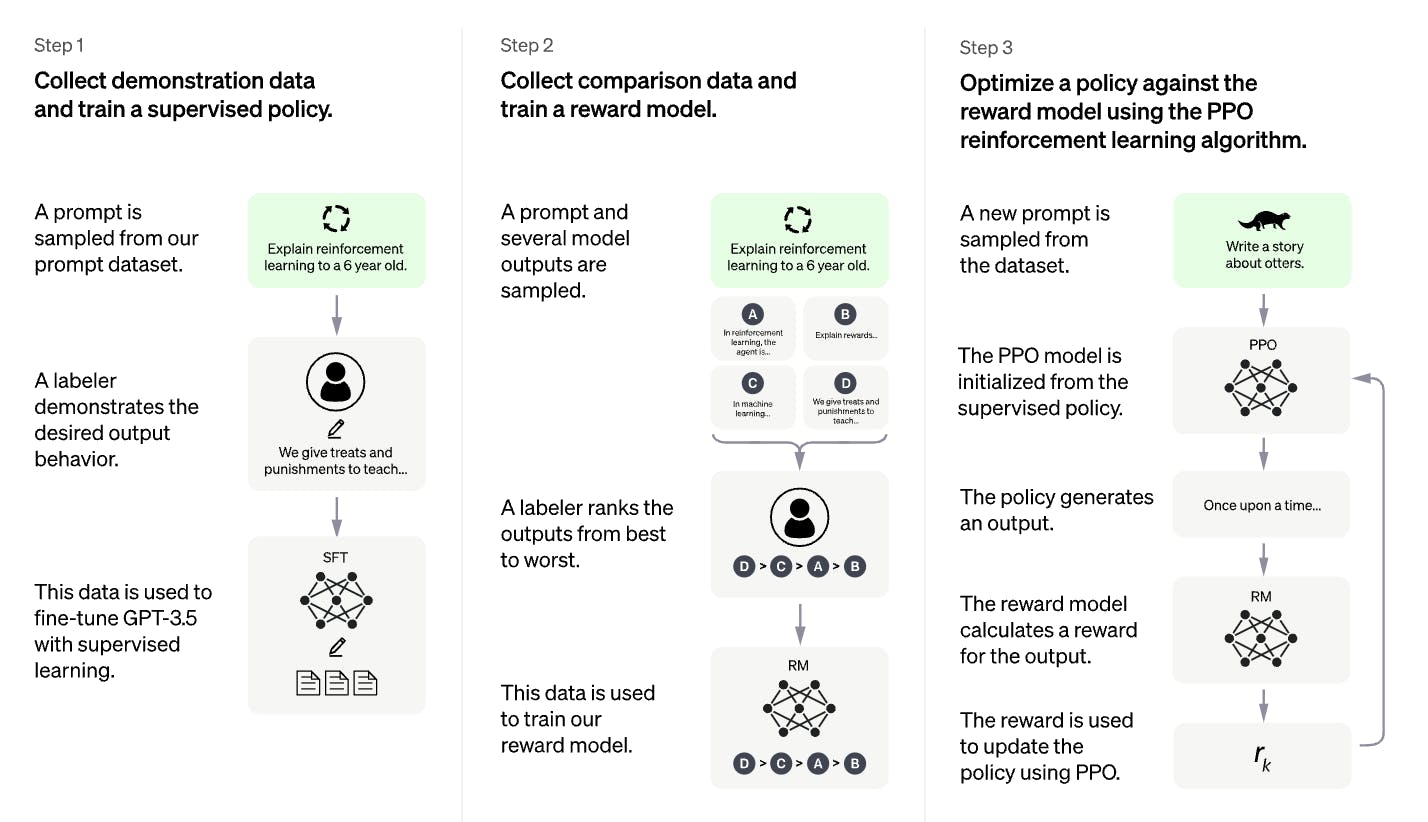

ChatGPT was trained with Reinforcement Learning from Human Feedback (RLHF) in the same way as InstructGPT, but with a slightly different data collection setup. In the first (supervised fine-tuning) step, human AI trainers wrote conversations in which they played both sides: the user and the AI assistant. This new dialogue dataset was mixed with the InstructGPT dataset, transformed into a dialogue format, and the rest of the training workflow proceeded as before.

You can see that the entire workflow is similar except for the data creation step.

Unlike InstructGPT, which was fine-tuned from GPT-3, ChatGPT was fine-tuned from a model in the GPT-3.5 series.

Try out ChatGPT: https://chat.openai.com/

As of April 2023, ChatGPT offers free access; for regular professional use, however, the paid subscription plan, ChatGPT Plus, is preferable.

Before we get into the wide spectrum of ChatGPT applications, it is worth taking a quick look at OpenAI's latest GPT model, GPT-4.

A sneak peek into GPT-4

At its core, GPT-4 is a large multimodal model that accepts image and text inputs and returns text outputs. Like other GPT models (and causal, or autoregressive, language models in general), it is trained to predict the next word in a document, using web-scale data from the internet. That data included correct and incorrect solutions to math problems, weak and strong reasoning, self-contradictory and consistent statements, and a great variety of ideologies and ideas.

So when prompted with a question, the base model can respond in a wide variety of ways that might be far from a user's intent. To align it with the user's intent within guardrails, OpenAI fine-tuned the model's behaviour using reinforcement learning from human feedback (RLHF).
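To make the next-word objective concrete, here is a toy sketch of autoregressive generation. A bigram model counts which word follows which in a tiny made-up corpus, then generates greedily, feeding each predicted word back in as the next input. It only illustrates the loop: GPT models use a Transformer over subword tokens instead of word counts, and usually sample from the predicted distribution rather than always taking the top word.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict:
    """Count, for every word, how often each other word follows it."""
    words = corpus.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts: dict, start: str, max_words: int = 5) -> str:
    """Greedy autoregressive decoding: append the most likely next word each step."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:  # no known continuation, so stop early
            break
        # most_common breaks ties in first-seen order
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat the cat ate the fish"  # made-up training text
model = train_bigram(corpus)
print(generate(model, "the"))  # → the cat sat on the cat
```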

ChatGPT Plus subscribers will get GPT-4 access on chat.openai.com.

Exploring OpenAI API

In this section, I will walk through some widely applicable tasks that can be done with the OpenAI API, using two endpoints:

- Text completion endpoint
- Chat completion endpoint

Before going into them, let's use the completion endpoint to get a feel for what prompts are and which models we can use.

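```python
import os

import openai  # pre-1.0 interface of the openai package (pip install "openai<1.0")

openai.api_key = os.environ["OPENAI_API_KEY"]


def callOpenAI(animal):
    """Ask the completion endpoint for three superhero names for an animal."""
    response = openai.Completion.create(
        model="text-davinci-003",  # current in April 2023; OpenAI has since retired it
        prompt=generate_prompt(animal),
        temperature=0.8,  # fairly high, so the suggestions are creative
        max_tokens=60,  # the endpoint defaults to 16, which can cut the names off
    )
    result = response.choices[0].text
    return result.strip()  # drop the leading whitespace the model usually emits


def generate_prompt(animal):
    """Few-shot prompt: an instruction, two worked examples, then an open slot."""
    return """Suggest three names for an animal that is a superhero.

Animal: Cat
Names: Captain Sharpclaw, Agent Fluffball, The Incredible Feline
Animal: Dog
Names: Ruff the Protector, Wonder Canine, Sir Barks-a-Lot
Animal: {}
Names:""".format(animal.capitalize())  # match the "Cat"/"Dog" style of the examples


if __name__ == '__main__':
    animal = input("Which animal do you want to name: ")
    print(callOpenAI(animal))
```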

Output:

```
Which animal do you want to name: tiger
Super Stripe, The Magnificent Mauler, Captain Stripes
```

The available models are listed here: https://platform.openai.com/docs/models

Text Completion

The text completion endpoint is a general interface to OpenAI's models for many kinds of tasks. At heart, the quality of its output depends on how the user designs the prompt, a practice popularly called "prompt engineering".

Let's look at a few tasks in the OpenAI Playground, which prompt engineers use to create and iterate on the prompts they send to the endpoint, just as we did above with the animal-naming example. In short, the better the prompt, the better the response.
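The animal-naming prompt is a few-shot prompt: a task description, a handful of worked examples in a fixed format, and an open slot for the model to complete. Here is a small sketch that assembles the same pattern from a list of examples. build_few_shot_prompt is a hypothetical helper, not part of the OpenAI library.

```python
def build_few_shot_prompt(instruction, examples, query,
                          input_label="Animal", output_label="Names"):
    """Join an instruction, worked examples, and an open-ended final slot."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"{input_label}: {example_input}")
        lines.append(f"{output_label}: {example_output}")
    # Leave the last output label empty so the model fills it in
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)

examples = [
    ("Cat", "Captain Sharpclaw, Agent Fluffball, The Incredible Feline"),
    ("Dog", "Ruff the Protector, Wonder Canine, Sir Barks-a-Lot"),
]
prompt = build_few_shot_prompt(
    "Suggest three names for an animal that is a superhero.", examples, "Tiger"
)
print(prompt)
```

Keeping the examples as data makes it easy to add, swap, or reorder them while iterating in the Playground, which is usually where prompt quality improves.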

Let's go over some of the tasks. Try your prompts on the OpenAI Playground: https://platform.openai.com/playground

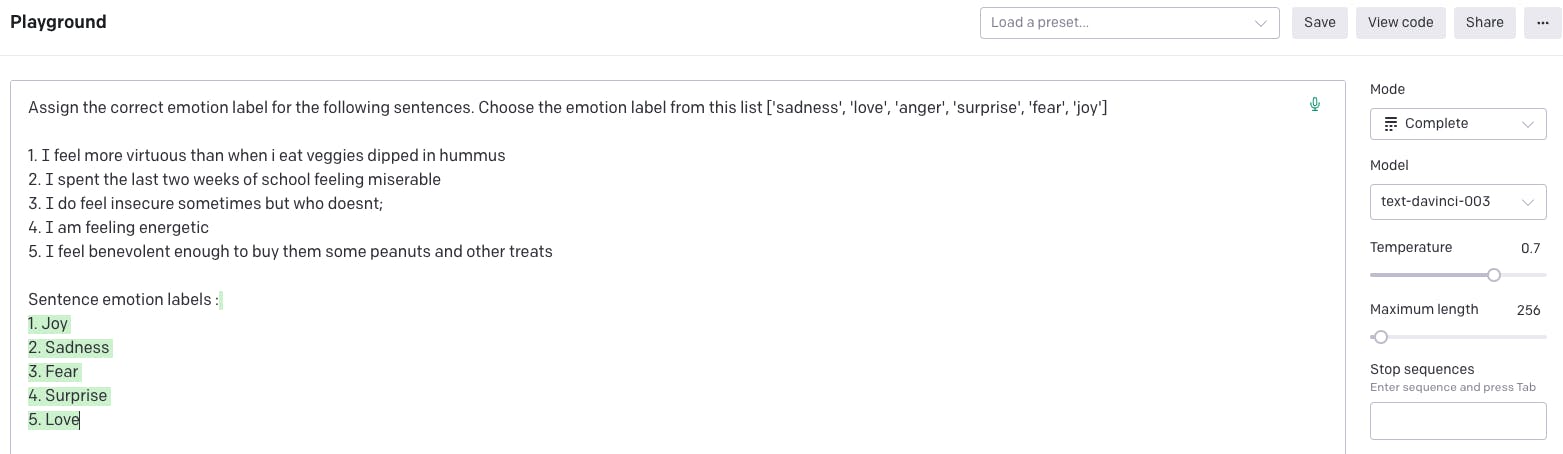

Classification

Here I used a prompt to classify text into emotion labels. The text shaded in green is the model's output.

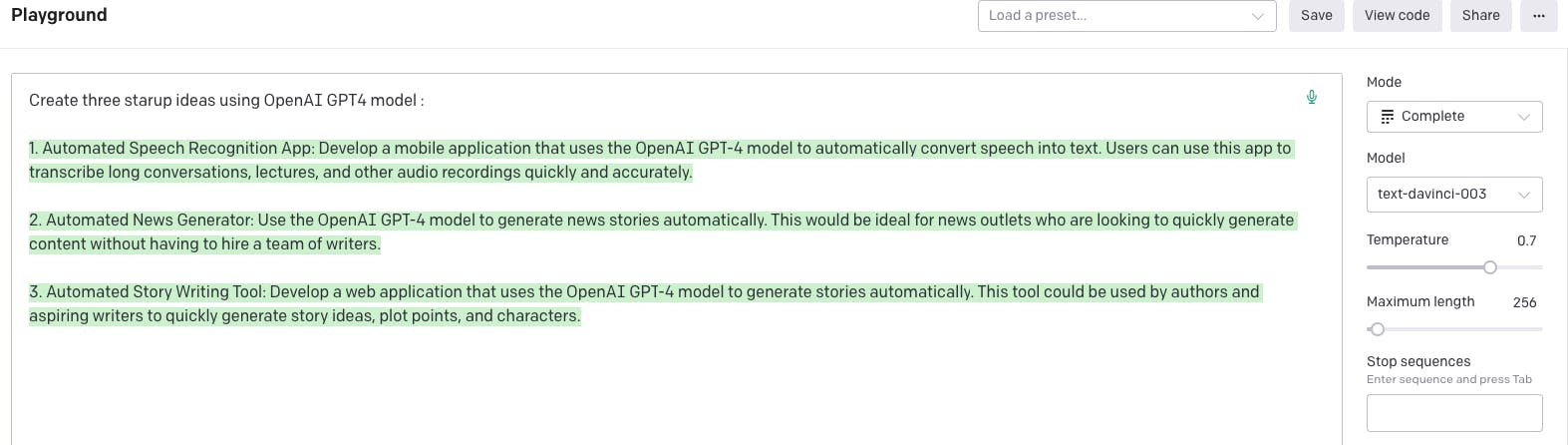
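The exact classification prompt only appears in the screenshot, so here is a sketch of the general pattern under assumed details. The label set and the helper names classification_prompt and parse_label are hypothetical. Listing the allowed labels in the prompt and checking the completion against them keeps the output usable downstream.

```python
from typing import Optional

LABELS = ["joy", "sadness", "anger", "fear", "surprise"]  # hypothetical label set

def classification_prompt(text: str) -> str:
    """Build a prompt that asks for exactly one label from LABELS."""
    labels = ", ".join(LABELS)
    return f"Classify the emotion of the text as one of: {labels}.\n\nText: {text}\nEmotion:"

def parse_label(completion: str) -> Optional[str]:
    """Normalise the completion and accept it only if it is a known label."""
    answer = completion.strip().strip(".").lower()
    return answer if answer in LABELS else None

print(classification_prompt("I finally got the job!"))
print(parse_label(" Joy."))      # → joy
print(parse_label("happiness"))  # → None
```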

Generation

Here is an example of using the text completion endpoint to generate text.

Below are the prompt and the generation; the generation is in green. I think the last two ideas are not great :) but let's move on for now.

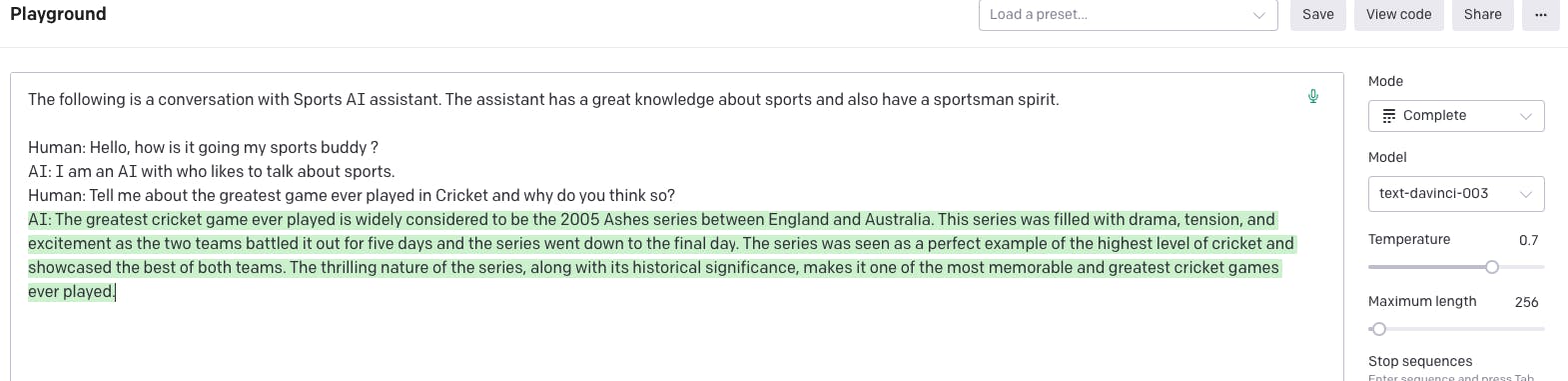

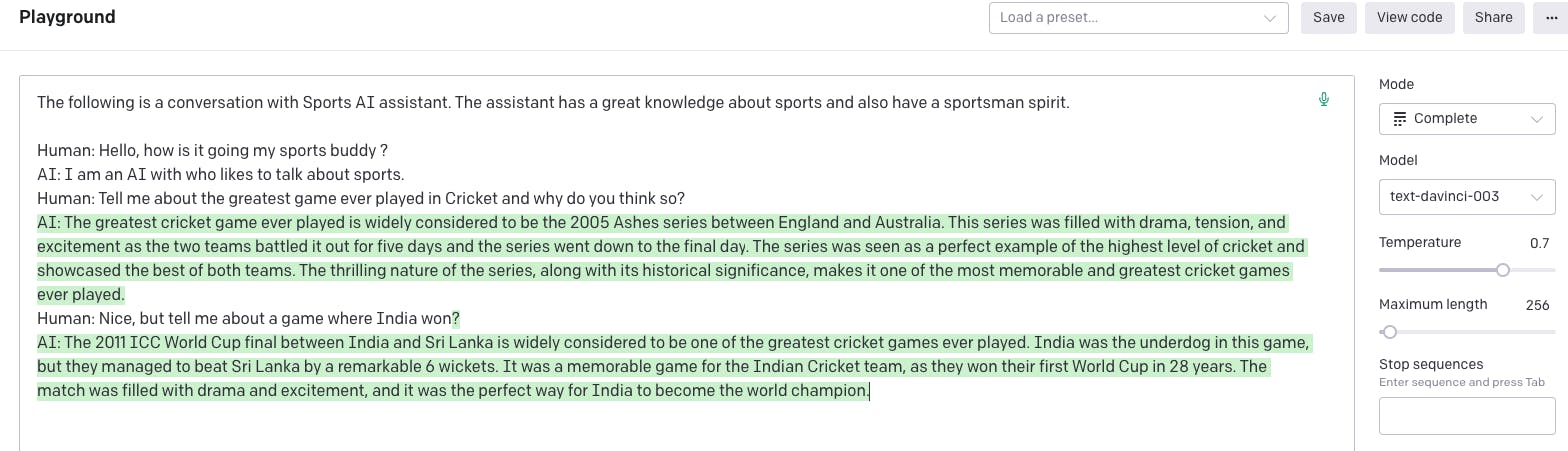

Conversation

The answer is pretty good, but let me probe a little further to get some opinionated answers. So, I will prompt the model again to find out about a game India played.

The text in green is the model's response; the rest is the prompt.

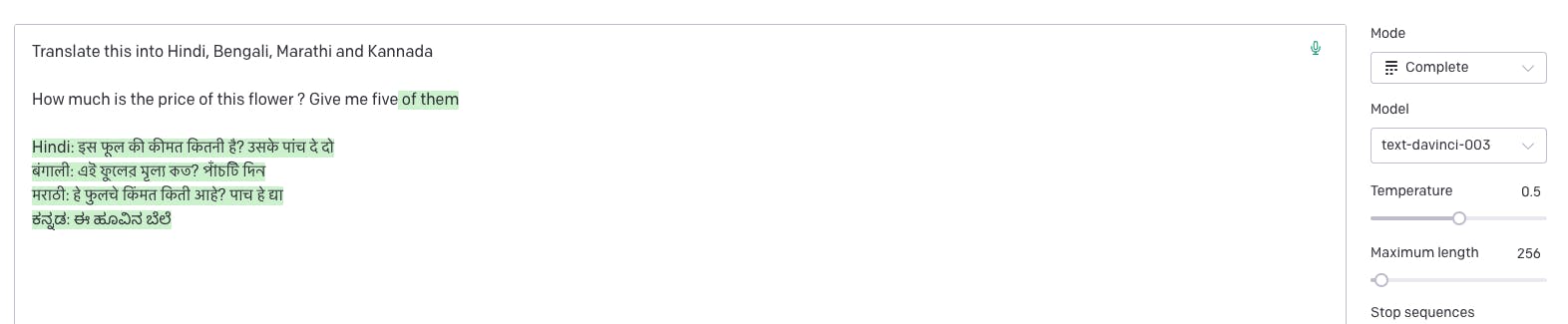
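With the text completion endpoint, a conversation is just text: the dialogue so far is written out as alternating speaker lines and ends with the assistant's tag, so the model's most likely continuation is the next reply. Here is a sketch under that assumption; the "Human:"/"AI:" tags and the helper name conversation_prompt are illustrative. In practice you would also pass a stop sequence such as "Human:" through the endpoint's stop parameter, so the model does not go on to write the user's next turn.

```python
def conversation_prompt(preamble, turns):
    """Render (speaker, text) turns as a transcript ending with an open AI turn."""
    lines = [preamble, ""]
    for speaker, text in turns:
        lines.append(f"{speaker}: {text}")
    lines.append("AI:")  # the model continues from here
    return "\n".join(lines)

turns = [
    ("Human", "Who won the 2011 Cricket World Cup?"),
    ("AI", "India won the 2011 Cricket World Cup."),
    ("Human", "Who did they beat in the final?"),
]
print(conversation_prompt("The following is a conversation with a helpful AI assistant.", turns))
```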

Translation

We could make these translations more fluent by providing more examples in the prompt or by fine-tuning a model through the OpenAI API. Note that I have lowered the temperature so that the model does not get too creative.

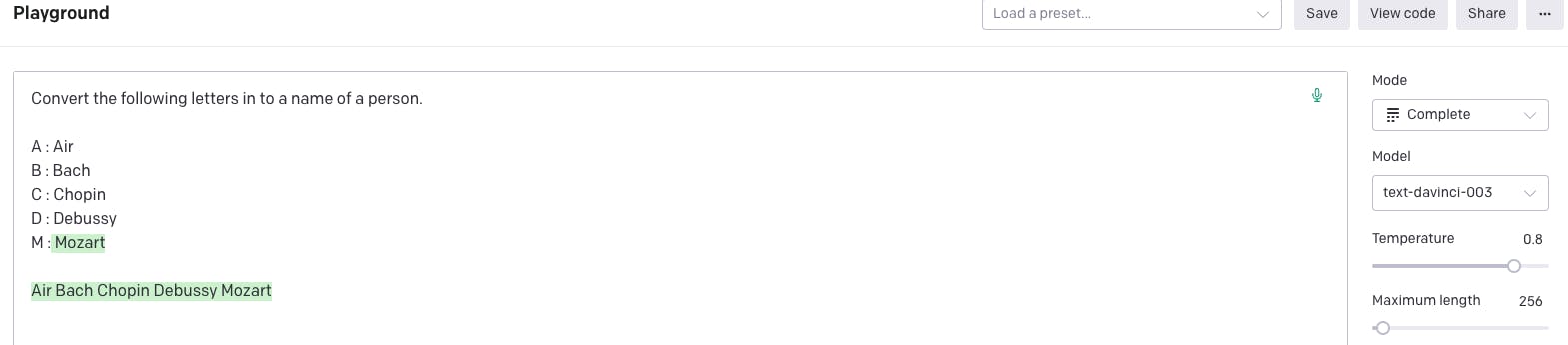
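Conceptually, temperature rescales the model's next-token scores (logits) before they are turned into probabilities. Dividing by a temperature below 1 sharpens the distribution toward the top token, and values above 1 flatten it, which is why a lower temperature gives less "creative" output. Here is a minimal sketch with made-up logits for three candidate tokens.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("this sketch needs temperature > 0")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At 0.2 almost all of the probability lands on the first token; at 2.0 the other two become much more likely to be sampled.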

Conversion

The API picks up the pattern given in the prompt and applies it to new input.

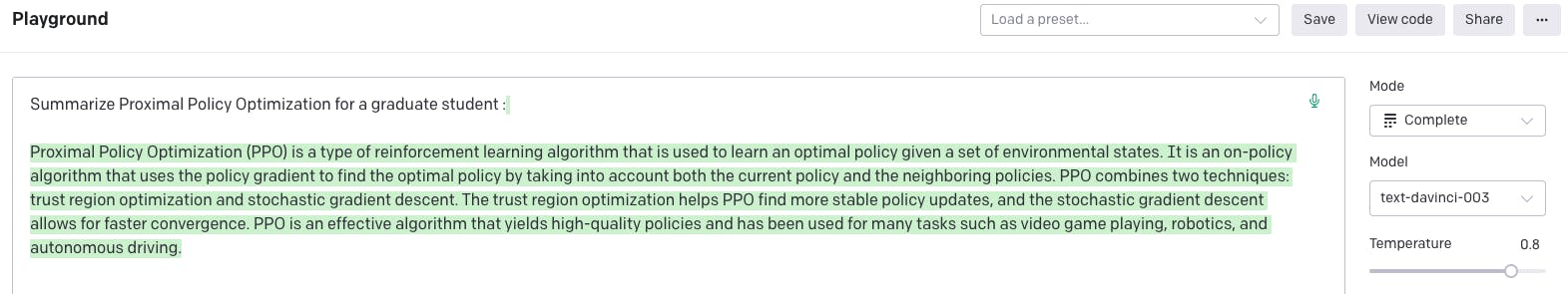

Summarization

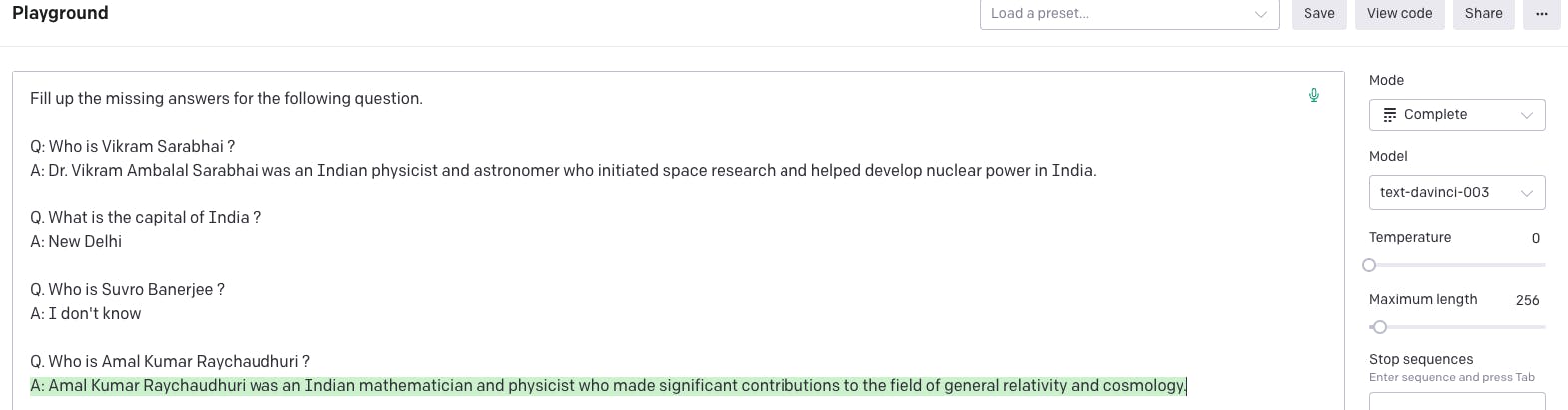

Factual Responses

GPT models are trained on massive amounts of publicly available data, so they have learnt a lot of factual information. But they also tend to make up answers. So, good practice is to keep the temperature low, which makes the model stick to its most probable tokens, and to tell the model in the prompt that it may say it doesn't know.
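One way to apply both suggestions is to give the model an explicit answer for when it doesn't know, and send the prompt with a low temperature. Here is a sketch; the wording, the "Unknown" sentinel, and the helper names are assumptions to tune, not settings from the original experiment.

```python
UNKNOWN = "Unknown"  # hypothetical sentinel the model is told to use

def factual_prompt(question: str) -> str:
    """Q&A prompt that gives the model an explicit way to decline."""
    return (
        "Answer the question truthfully. If the question is nonsense, a trick, "
        f'or you are not sure of the answer, reply with "{UNKNOWN}".\n\n'
        f"Q: {question}\nA:"
    )

def is_declined(answer: str) -> bool:
    """True when the model used the escape hatch instead of guessing."""
    return answer.strip().strip(".").lower() == UNKNOWN.lower()

# Send this prompt with a low temperature (e.g. 0) so the model sticks to likely tokens
print(factual_prompt("Who was the first person to walk on Mars?"))
print(is_declined(" Unknown."))  # → True
```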

Chat Completions

Chat models take a series of messages as input and return a model-generated message as output. This format is very effective for multi-turn conversations.

Let's take an example

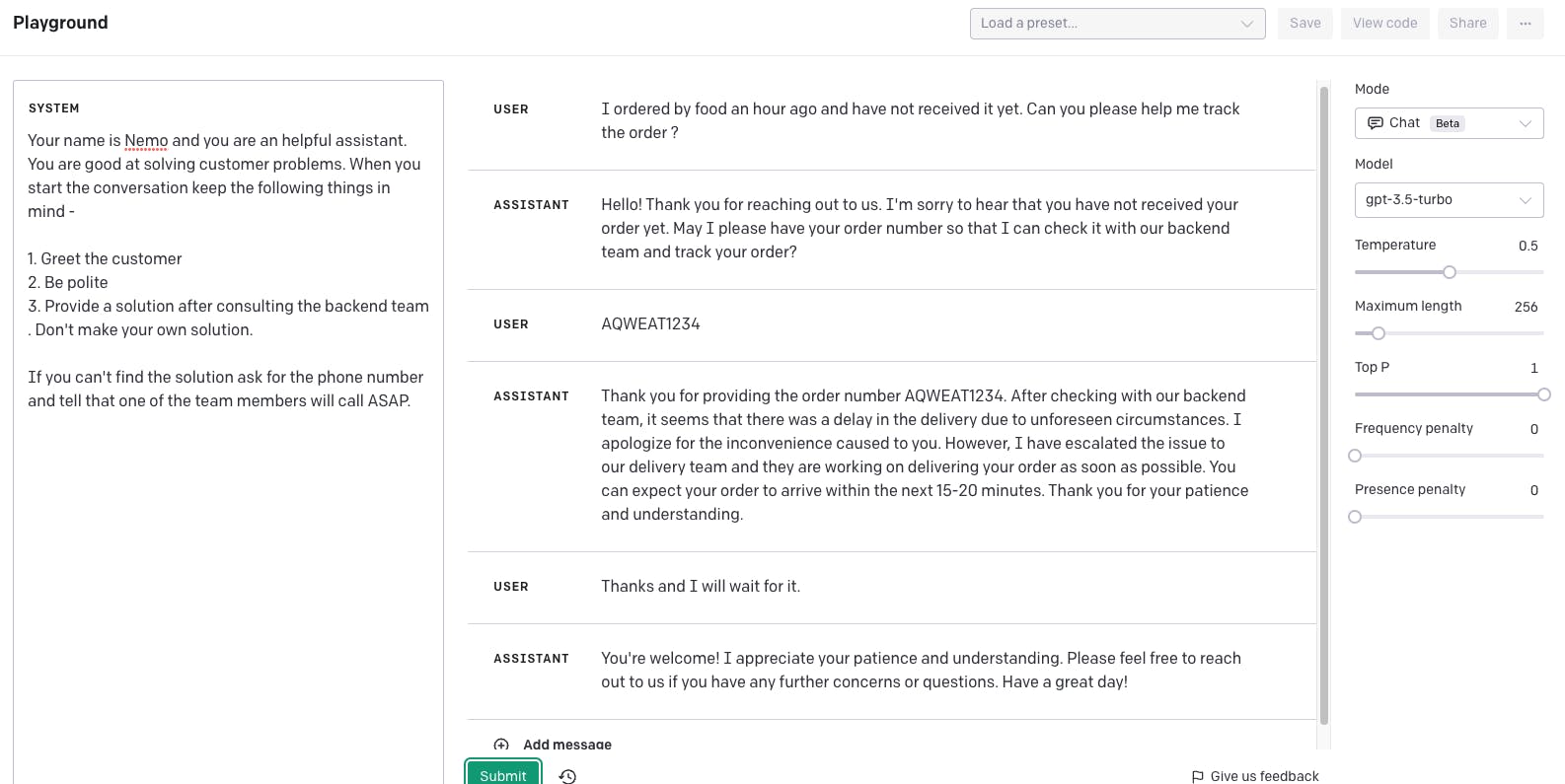

Above is the Chat format in the OpenAI Playground; note that the mode is set to "Chat". I am using gpt-3.5-turbo, the model that powers ChatGPT under the hood. As a paid user, I could also have used GPT-4. I have kept the temperature at 0.5 so that there is some randomness in the response, but not too much.

The messages parameter is a list of messages, each tagged with one of three roles. We will look at this programmatically a little later, but let's first understand the roles.

system: sets the behaviour of the assistant. In the prompt above, it tells the assistant that it is Nemo, a helpful assistant, and lists the traits it should have.

user: instructs the assistant. User messages are typically written by the end users of an application, or set by the developer as an instruction.

assistant: stores the assistant's prior responses so the model keeps the context; the developer can also write assistant messages to demonstrate the desired behaviour.
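Because the endpoint is stateless, keeping context means resending the whole history on every turn: the system message first, then alternating user and assistant messages, with each reply appended before the next question. Here is a sketch of that bookkeeping. fake_reply stands in for the actual API call so the example runs offline, and both helper names are hypothetical.

```python
def fake_reply(messages):
    """Stand-in for the API call: echoes the last user message."""
    return f"You asked: {messages[-1]['content']}"

def chat_turn(history, user_text, reply_fn=fake_reply):
    """Append the user's message, get a reply, and store it as an assistant message."""
    history.append({"role": "user", "content": user_text})
    reply = reply_fn(history)  # the full history is sent on every turn
    history.append({"role": "assistant", "content": reply})
    return reply

history = [{"role": "system", "content": "You are Nemo, a helpful assistant."}]
chat_turn(history, "What is the name of the cricket stadium in Bangalore?")
chat_turn(history, "What is the nearest Metro station?")
print([m["role"] for m in history])
# → ['system', 'user', 'assistant', 'user', 'assistant']
```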

It is also important to know how this API is priced: usage is billed by the number of tokens, counting both the prompt and the completion. Current prices are listed on OpenAI's pricing page.
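Since cost is linear in tokens, a back-of-the-envelope estimate is simple arithmetic. In this sketch the per-1K-token prices are assumptions based on the early-2023 list prices ($0.002 for gpt-3.5-turbo, $0.02 for text-davinci-003, with prompt and completion tokens priced alike), so check them against the current pricing page.

```python
# Assumed early-2023 prices in USD per 1,000 tokens (prompt and completion alike)
PRICE_PER_1K = {"gpt-3.5-turbo": 0.002, "text-davinci-003": 0.02}

def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimated USD cost of one request."""
    total_tokens = prompt_tokens + completion_tokens
    return total_tokens / 1000 * PRICE_PER_1K[model]

# e.g. 10,000 requests of ~300 prompt tokens and ~200 completion tokens each
for model in PRICE_PER_1K:
    print(model, round(10_000 * estimate_cost(model, 300, 200), 2))
```

With these assumed prices, 5 million tokens come to about $10 on gpt-3.5-turbo versus $100 on text-davinci-003, matching the 10% price ratio.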

Now that we have seen both Text Completion and Chat Completion, here is something to keep in mind.

As OpenAI's documentation put it: "Because gpt-3.5-turbo performs at a similar capability to text-davinci-003 but at 10% of the price per token, we recommend gpt-3.5-turbo for most use cases." Switching from text completion to chat completion is straightforward, and OpenAI's documentation covers the details.
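The simplest migration is to send an existing completion prompt as a single user message, optionally preceded by a system message for standing instructions. Here is a sketch of that conversion (to_chat_messages is a hypothetical helper). The resulting list goes into the messages parameter in place of prompt, and the reply is then read from choices[0].message.content instead of choices[0].text.

```python
def to_chat_messages(prompt, system=None):
    """Wrap a text-completion prompt as a Chat Completions messages list."""
    messages = []
    if system is not None:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages

completion_prompt = "Suggest three names for an animal that is a superhero.\n\nAnimal: Tiger\nNames:"
print(to_chat_messages(completion_prompt, system="You are a helpful assistant."))
```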

Programmatically, a Chat model call can be written as follows. I have picked a simple example to show how it works:

```python
import os

import openai  # pre-1.0 interface of the openai package (pip install "openai<1.0")

openai.api_key = os.environ["OPENAI_API_KEY"]

completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is the name of the cricket stadium in Bangalore?"},
        # An earlier assistant reply, included so the model keeps the context
        {"role": "assistant", "content": "The cricket stadium in Bangalore is called M. Chinnaswamy Stadium."},
        {"role": "user", "content": "What is the nearest Metro station?"},
    ],
)

print(completion.choices[0].message)
```

The output is as follows:

```json
{
  "content": "The nearest Metro station to M. Chinnaswamy Stadium in Bangalore is Cubbon Park Metro Station, which is part of the Purple Line of the Bangalore Metro.",
  "role": "assistant"
}
```

More information on the API can be found here: https://platform.openai.com/docs/api-reference/chat

Author's Note

We could do a plethora of things with the OpenAI API: tasks with GPT models like the ones explained in this blog, plus image generation, speech-to-text, and moderation, as well as fine-tuning some of these models.

In the next blog, I will build on this foundational knowledge of OpenAI GPT to create a chat application that solves a specific problem in a particular domain. I am still looking for some inspiration to formulate the problem statement :)

I also love solving problems related to NLP and Deep Learning. If you would like to collaborate, please reach out to me through my LinkedIn profile. I will be happy to connect.